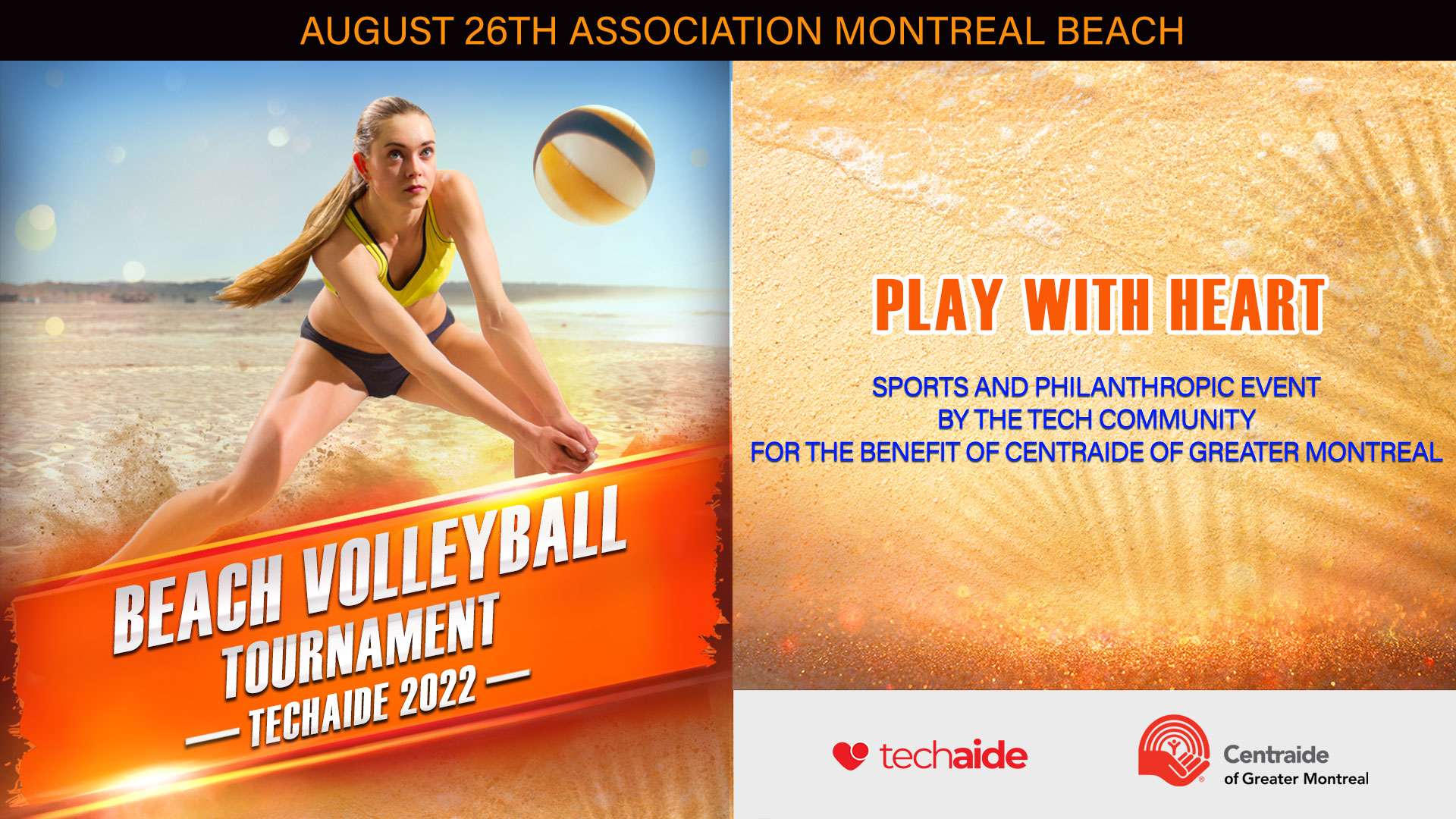

5 AI Developments That Will Impact Your Startup

THIS ARTICLE WAS ORIGINALLY PUBLISHED ON THE REAL VENTURES BELIEVING BLOG ON MAY 9, 2019.

Takeaways from the 2019 TechAide AI Conference

Only a few years ago, artificial intelligence seemed fringe and cutting-edge. Today it is already at the core of many of the technologies we use, and it will only become more integral to the tools and processes of every business and industry. Because of the stunning ability of algorithms to help us parse information and optimize processes, AI has the potential to democratize access to services from legal advice to healthcare to finance. For startups, in particular, keeping up with the new trends and research in AI will make the difference between a good idea and a world-altering product.

In this article, we have summarized some of the key takeaways from the TechAide AI conference led by Google Brain’s Hugo Larochelle on April 26, 2019. Predicting the impact this research will have on startups is a bit like fortune-telling, something machine learning (ML) practitioners can appreciate. Still, tomorrow’s leaders will be not only influenced by these latest findings but also inspired by the overarching theme of the conference: giving back.

Here’s a quick take on the day’s output…

Causation is Not Correlation

YOSHUA BENGIO, U DE M, MILA, IVADO

“We don’t have systems that learn sufficiently rich understandings of the world… that even a two-year-old has in terms of physics or psychology.”

— Yoshua Bengio on “A Meta-Transfer Objective for Learning to Disentangle Causal Mechanisms” or common sense in ML

THE GIST:

Bengio’s team’s insight was to look at how quickly a learner adapts, while it is learning, to small changes in the distribution of the objects it “encounters.” By enabling a kind of “squirreling away” of some learned details, the agent can use that information later to “remind” itself of a similar past experience.

By running their “toy” two-dimensional experiment and tweaking the data in small ways, they were able to recover the correct causal structure of the variables in the data. A lot of work remains, says Bengio, in developing this approach, particularly in scaling up the experimental structures explored.
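One way to see why adaptation speed can carry a causal signal is sketched below. This is an illustration under assumptions that are not in the talk summary: two binary variables where A causes B, hypothetical probability tables, and softmax-parameterized models. After a shift in P(A), a model factored in the causal direction, P(A)P(B|A), already has the right conditional, so the expected gradient on that module is zero and only the small marginal needs to adapt. A model factored the other way, P(B)P(A|B), has to relearn its conditional as well.

```python
# Toy setup (all numbers are hypothetical): binary A causes binary B.
P_B_GIVEN_A = [[0.9, 0.1], [0.2, 0.8]]   # mechanism P(B | A), unchanged by the shift
P_A_TRAIN = [0.7, 0.3]                   # P(A) seen during training
P_A_SHIFT = [0.2, 0.8]                   # P(A) after an intervention on A


def joint(p_a):
    """Joint table P(A=a, B=b) = P(A=a) * P(B=b | A=a), indexed as [a][b]."""
    return [[p_a[a] * P_B_GIVEN_A[a][b] for b in range(2)] for a in range(2)]


def marginal_b(table):
    """P(B=b), obtained by summing the joint over A."""
    return [table[0][b] + table[1][b] for b in range(2)]


def a_given_b(table):
    """P(A=a | B=b), indexed as [b][a]."""
    p_b = marginal_b(table)
    return [[table[a][b] / p_b[b] for a in range(2)] for b in range(2)]


def conditional_gradient(weights, target, model):
    """Gradient of the expected log-likelihood w.r.t. a conditional's softmax logits.

    weights[c] is the shifted probability of the conditioning value c;
    target[c] and model[c] are the shifted and the trained conditionals.
    """
    return [[weights[c] * (target[c][v] - model[c][v]) for v in range(2)]
            for c in range(2)]


train, shift = joint(P_A_TRAIN), joint(P_A_SHIFT)

# Causal factorisation P(A) P(B|A): the trained conditional is still correct.
grad_causal = conditional_gradient(P_A_SHIFT, P_B_GIVEN_A, P_B_GIVEN_A)

# Anti-causal factorisation P(B) P(A|B): the trained conditional is now wrong.
grad_anticausal = conditional_gradient(
    marginal_b(shift), a_given_b(shift), a_given_b(train))

print("causal      gradient on P(B|A) logits:", grad_causal)
print("anti-causal gradient on P(A|B) logits:", grad_anticausal)
```

The causal model has nothing to fix in its conditional, while the anti-causal model gets a nonzero gradient there. That gap in how much must be relearned is the kind of signal an adaptation-speed objective could pick up.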

THE TAKEAWAY:

This research is defining the direction of the field, trying to relax what is usually considered a rigid assumption, to find a potent tool for discovery: causality. By accepting dependence but carefully scraping it away, the team encapsulates the pertinent knowledge to piece together the scaffold of causal relationships hidden in the data. Typically, in ML you have a dataset representing some variables, and then you massage, manipulate and transform it until your system produces the optimal desired result on novel data. But as we all know, language is interdependent and life is messy. If we can find a robust way to extract causal relationships, just imagine the kinds of questions we can begin to answer.

THE POTENTIAL IMPACT:

World-altering. This is the type of artificial intelligence that the field’s pioneers have been working toward for decades and could help take us from the “soft” AI that we currently have to more robust deep learning models and artificial neural networks that are able to truly learn from past exposure.

Learning With Less Effort

NEGAR ROSTAMZADEH, ELEMENT AI

“It takes up to an hour for each image. For training a segmentation network, you need to have thousands of images. Wouldn’t it be great to learn a task with less annotation? […] Also, we have access to huge [amounts] of data which are not necessarily annotated for the needs we have. Wouldn’t it be great [to use] that information?”

— Negar Rostamzadeh on Learning with Less Labeling Effort, @TechAIDE 2019

THE GIST:

If you have any experience with datasets, you know that labelling takes a long time. And computer vision uses lots of images. Finding smarter ways to label these images wouldn’t just be nice; it would have the potential to transform the field.

To conquer the drudgery (and ultimately cost) that is pixel-wise labelling, and segmenting images, Dr. Rostamzadeh and her colleagues devised novel point-level methods, setting new benchmarks for essential tasks. What’s more, by cunningly leveraging pre-labelled data from the Internet, she and her team showed that the method has legs.

THE TAKEAWAY:

The research defines a scalable technique that gets results. Time being measured in dollars, such tools will certainly cut costs. Further, by removing the time-consuming drudgery involved with labelling, humans, who are typically far more creative and ambitious than computers, will be freed up to do more interesting and analytical work.

THE POTENTIAL IMPACT:

Time lost is irreplaceable. Agile, Lean, or whatever your M.O., this kind of new efficiency has the potential to reduce major technical debt down the road. Further, lower costs in computer vision can translate into savings for consumers of products using this technology, with impacts from healthcare to transportation.

Context is Everything

JAMIE KIROS, GOOGLE BRAIN TORONTO

“A really common theme that I want you to remember in this talk is that meaning is not in language. The language indicates the meaning. […] A lot of our current methods that we’re using now assumes [the former] and ignores the more indicative components. It turns out by [not ignoring them] we can actually go a long way.”

— Jamie Kiros on “Grounding and Structure in Natural Language Processing”, @TechAIDE 2019

THE GIST:

Natural Language Processing (NLP) is hard. As Kiros walked the audience through her work, she noted that “context is everything.” It’s what grounds the meaning of what is being communicated. It enables structures to be inferred. So why do researchers ignore it in most cases of Machine Learning? It’s because it’s that hard.

“Generalized Machine Translation (GMT)” refers to the set of ML problems that map a translation task to any other form or modality. Think: the usual translation between human languages, captioning images, interfacing with machines, and creating meaningful utterances. Many of these tasks go beyond the familiar (have you tried beatboxing with Google Translate?) but it’s work like Kiros’ that will get us there.

Rather than making the language model ever-bigger, or trying to force their models to deliver what they want through strong, programmatic constraints, Kiros and her colleagues gently nudge their models in the right direction by fiddling with the representations the model learns from. Representations are key elements of machine learning, and you can think of them as the way data presents itself. For example, coordinate systems should be a familiar family of representations. In the rectangular system (remember x-axis, y-axis), the map f(x) = sin(x) is the rolling pattern that rises and falls by no more than one unit. If we instead encode the same relationship in polar coordinates, reading x as the angle and sin(x) as the radius, it appears as a circle. To get back, we can decode just as easily. So information can be encoded and decoded in different ways, depending on desired outputs. By applying common NLP methods and changing only the encoder/decoder networks, they achieved some promising results.
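The coordinate analogy above can be checked numerically. The sketch below is only an illustration of that analogy, not of Kiros’ models: it assumes we read x as a polar angle and sin(x) as the radius, and the function names `encode` and `decode` are hypothetical. Inputs are restricted to the open interval (0, π) so that decoding is unambiguous.

```python
import math


def encode(x):
    """Read (x, sin x) as polar (angle, radius) and return the Cartesian point."""
    r = math.sin(x)
    return (r * math.cos(x), r * math.sin(x))


def decode(point):
    """Recover x from an encoded point, assuming x was in (0, pi)."""
    px, py = point
    return math.atan2(py, px)


# Sample inputs strictly inside (0, pi): 0.1, 0.4, ..., 2.8.
xs = [0.1 + 0.3 * k for k in range(10)]
points = [encode(x) for x in xs]

# Every encoded point sits on the circle centred at (0, 0.5) with radius 0.5.
on_circle = all(abs(math.hypot(px, py - 0.5) - 0.5) < 1e-12 for px, py in points)

# Decoding returns the original inputs, so no information was lost.
round_trip = all(abs(decode(p) - x) < 1e-12 for p, x in zip(points, xs))

print("on circle:", on_circle, "| round trip:", round_trip)
```

The same information shows up as a wave in one representation and as a circle in the other, and a decoder recovers the input in both cases. That is the sense in which changing only the encoder and decoder changes what a model has to work with.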

THE TAKEAWAY:

Ignoring the context of a communication places a very rigid constraint on the ability of any solution to capture the richness of language. It’s hard to think about GMT without alluding to the Universal Translator from Star Trek. It rarely met a language it couldn’t decode (“Darmok” ST:TNG ep 102). But in that aspirational world, Roddenberry was telling us his belief that we must find a way to understand one another if we want to find peace. After all, if the objective of solving GMT is to improve communication in general, we can no longer ignore context.

THE POTENTIAL IMPACT:

Companies working in natural language processing already know how challenging it is to gain a clear understanding of the mechanisms of living language, and sidestepping the question of context only underscores the difficulty. By taking strides toward enabling systems to infer context, this work could lead to applications from customer service to translation to education, with major democratization of information and access as a possible result.

AI to Diagnose Human Disease

JENNIFER CHAYES, MICROSOFT, NEW ENGLAND

“The human immune system knows about the diseases that we have. How do we decode that and find out about them? How can we use the immune system to fight cancer in a way that is so much more targeted with less collateral damage than chemo and radiation and the standard methods we use?”

— Jennifer Chayes, on minimizing the patient-impact of drug trials, @TechAIDE 2019

THE GIST:

Adaptive Biotechnologies, in conjunction with Dr. Chayes’ Boston Microsoft lab, is leveraging the noble T-cell’s “seek, multiply, and destroy” mechanism to change the face of how we diagnose and treat disease. First up, late-stage cancers and auto-immune diseases.

Adaptive was able to demonstrate that it is possible to predict with up to 95 percent accuracy which patients had a particular virus by using their profiled T-cell receptors. It is a bittersweet result, however, as their system didn’t generalize easily. In a new study, they will attempt to predict receptor binding energies of the 10¹² possible receptors to achieve a similar end, building on the work of Mark Davis at Stanford.

THE TAKEAWAY:

Chayes believes that ML’s advantage over the traditional approaches of computational biology lies in the very nature of the problems. The sparseness of the data (think: how “locally” packaged the data is) is better suited to ML tools and techniques than to current methods.

But clinical research, and drug trials in particular, is costly. In cancer immunotherapy, when standard treatments aren’t getting it done, designing the custom-for-you drug can run into million-dollar territory. But, as she explains, “what if you know [a drug] is not going to work [for a patient] or have bad side effects? If you pull those people out, you can get certain drugs that never would have made it through clinical trial approved for the right people.”

THE POTENTIAL IMPACT:

Advancing machine learning to tackle the highly specific, difficult computational problems that are at the intersection of molecular biology and computational science requires the cooperation of large institutions, researchers and startups. By working together for mutual benefit, there is the possibility of a massive payoff for society through reduced costs in treatment or even the eradication of diseases.

Machine Learning and Creativity

PABLO SAMUEL CASTRO, GOOGLE BRAIN LAB, MONTREAL

“I’m really interested in ways to incorporate [machine learning] techniques into live performances, which introduces a whole new level of complexities because I play with other musicians and I can’t be like ‘Ok. Hold on. The model is doing its inference… ok, now we can continue.’ It really has to adapt to whatever is happening around it and it has to sound good because I’m usually playing in front of a paying audience.”

— Pablo Samuel Castro on the potential of live jamming with algorithms, @TechAIDE 2019

THE GIST:

Google has a rich history of making art with their AI projects: think dreamscape horrors meets Van Gogh or the first AI-powered Google Doodle in celebration of J.S. Bach. It is indeed, as Castro beams, “delightfully silly”. A whimsical salute to the giant of Baroque music, the Doodle showcases the power of an off-the-shelf model to create something unique. For Castro, it was also “a really creative and useful way of getting people in touch in a hands-on fashion with machine learning technologies”.

THE TAKEAWAY:

AI isn’t going to kill the YouTube star, as Castro demonstrated live, jamming with a network on-stage. It represents a new era of creative pursuit and, at its core, reminds us that human beings are creators. All art is rule-breaking of some kind. Machine learning, Castro argues, is just another tool that we can use to break those rules wide open.

THE POTENTIAL IMPACT:

This has the potential to break down walls for artists, enabling creators to build incredible art with the aid of inexpensive tools that formerly only the major record labels or well-funded, “famous” artists had access to. Further, it’s fun. The future of humanity isn’t all about optimization and financial returns. Ultimately, humans will continue to produce and consume art regardless of the practicality or necessity of it, and as we know, there will always be opportunity for innovators who put smiles on people’s faces.

Real Ventures is proud to support TechAide, which raised more than $165,000 for Centraide of Greater Montreal through the 2019 TechAide AI conference.